How AI Became Our Fifth Co-Author (And What That Taught Me About Collaboration)

Ways to use AI to support the collaborative writing process, without having it just write for you

TL;DR: Four co-authors used AI as a thought partner to assist with writing a peer-reviewed article. Not as a content generator, but as a tool to support collaboration through the publishing process. We co-created our draft in just a few short weeks, but what surprised me wasn’t the efficiency gains. It was discovering how AI could help us see connections between our different areas of expertise and build on each other’s ideas in ways we probably wouldn’t have found on our own. Here’s what we did, what worked (and what didn’t), and what it might mean for collaborative writing.

What if we…?

What if AI could make collaboration better, not just faster? What if it could help four busy people, some of whom are just meeting, scattered across time zones and holding different perspectives on a topic, write like a team that’s worked together for years? How might AI help us craft (and I use that word deliberately) a piece that showcases our expertise, within the turnaround time, and without just ‘writing’ it for us?

When my co-authors and I set out to write an article for a special issue on AI, I knew it was a chance to experiment. But I wanted to do it in a way that wasn’t outsourcing the task to AI. I wanted to try to embody what Beth Kanter, Ethan Mollick, and others are writing about in terms of augmenting our process and using the tech to enable something qualitatively different (and perhaps better) than what we could do without it.

Now that the piece has been published, I want to share what we did. It turned out to be a really interesting lesson in how to augment collaboration, along with the limits of AI. I’m also sharing it in the spirit of publicly documenting what it looks like to truly experiment with AI. I find that for so many people, that’s the second hurdle to getting started (the first is wondering if you can use this tool and still be ethical, human, environmentally conscious, etc.).

The Woes of Academic Publishing

Co-authoring is hard, especially once you’ve left academia and work in a setting where publishing might be even less valued. It’s challenging to find the time to really sit with one another, hash out the outline, combine multiple voices into a coherent draft, and maintain version control all at once.

For this article, a small group of us jumped on an opportunity to write for The Foundation Review, a popular and trusted source in our field, when they published a call for papers related to AI. Only, we hadn’t all collaborated together before. We were connected in various ways (mostly through Rachel), but we didn’t have a ton of history to pull from.

We had me with my leadership and learning theory lens, Rachel thinking about tech futures, Blanch who actually implements these systems, and Abigail keeping us grounded in equity, org culture, and operations. Four brilliant minds, each seeing our chosen topic, Oral and Alternative Reporting (OAR) with AI, through unique lenses.

I wondered: what if AI could help us actually collaborate better, not just write faster?

Initial Experiment: What If We Started from Shared Ground?

Instead of the more typical approach (everyone does their own research, we meet to share, someone synthesizes the notes and sends around a draft, we post comments, and so on), I tried something different. I used Gemini’s Deep Research to conduct a literature review of OAR within philanthropy and to research each of us as professionals. Then, I used that synthesis to create interview questions tailored to each co-author’s expertise.

Specifically, I gave Deep Research our names, the call for papers, and our accepted abstract and asked it to do the following:

Please conduct research on the concept of Oral and Alternative Reporting (OAR) in philanthropy to see what you can find. Then pair that with research on each of the authors (including myself) and our unique perspectives and experiences. After that, I would like you to suggest how our unique experiences could combine to flesh out the full paper. In order to do that, you should identify a set of interview questions that each of us could reflect on that would provide the fodder for our collaboration. There may be questions common to all of us, and specific questions for each of us based on our background.

I did this back in June and, well, let’s just say my prompting skills for deep research, and its capabilities, have improved since then! But it was still highly effective!

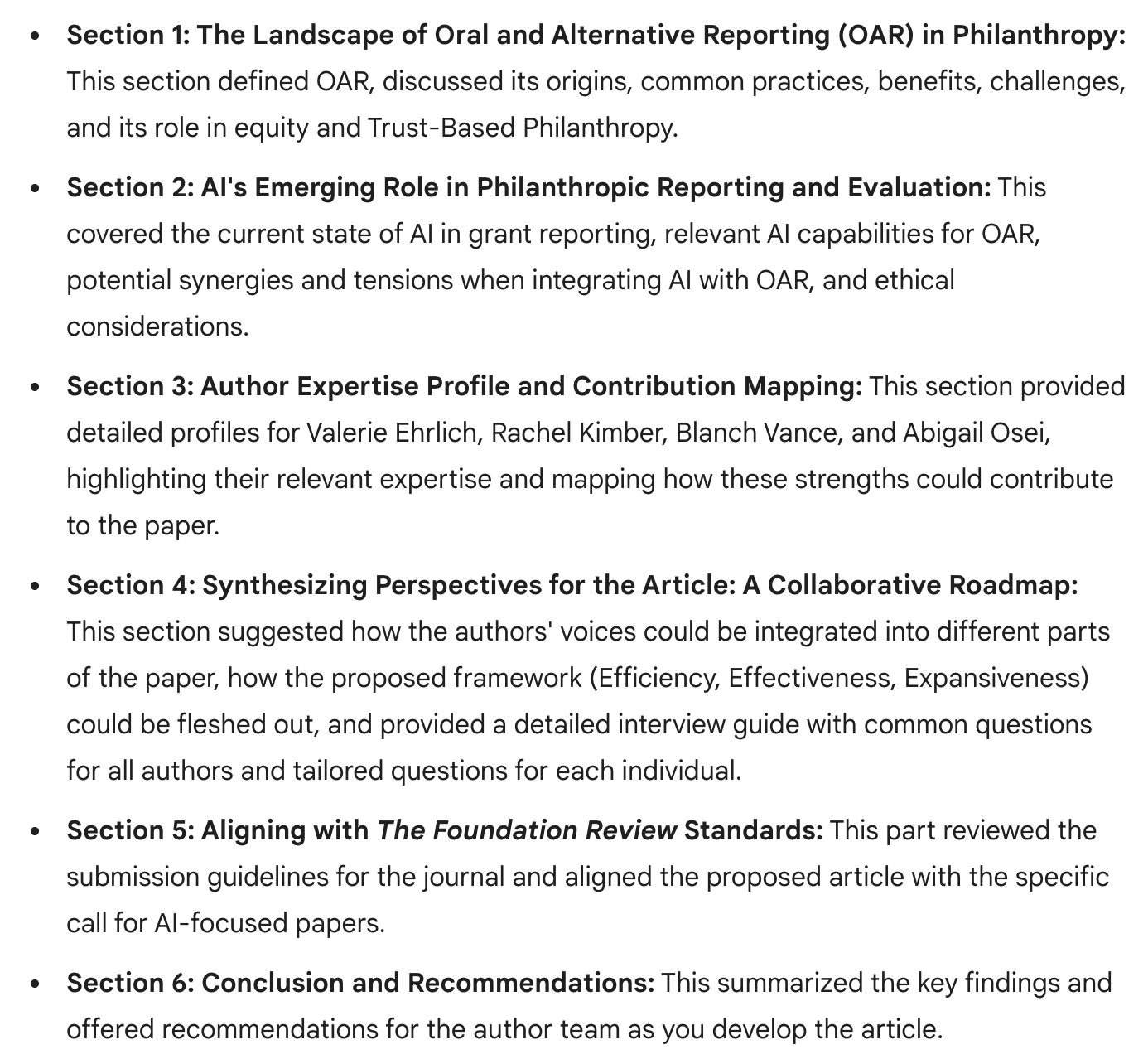

It produced a 27-page document with the following:

I was able to send each author the background research document along with a set of questions for each of us to answer. I realized we weren’t starting from zero. We were starting from a shared understanding that also took into consideration our unique perspectives, and building outward.

The Magic of Async Collaboration (With an AI Assist)

I was intentional about what I asked the AI to do with the interview questions. Instead of asking everyone the same things, I wanted the AI to develop three types of questions:

- Common ones everyone could answer through their lens

- Expertise-specific questions that would showcase their unique knowledge, perspectives, and experiences

- Connection questions that explicitly linked our expertise to each other’s

In this way, the LLM hadn’t just organized our thoughts or given us something generic; it had helped us see the connective tissue between our ideas and given us questions that could further our own thinking about those connections.

We were each able to answer the questions in a shared document. Some of us typed out our thoughts, others used AI to pull in pieces of information from existing writing and presentations, and some recorded voice memos to ‘talk’ through their thoughts, transcribed them, and then had AI help clean them up. This turned out to be another benefit! If you’re an introvert like me, you process quietly and usually well after the fact. Having a set of questions I could mull over for a few days was incredibly helpful in getting at my deepest thoughts.

And yes, ideally we would have had weekly one-hour Zoom calls where we could write and chat and really be in community and collaboration with one another. But that’s not where we are right now. The reality of our nonprofit and consulting work is that we’re big on ideas but short on time and resources. We had to take this opportunity and make the most of it.

Building a Project Brain in Claude

After generating the research report and interview questions and gathering the answers from each co-author, I switched over to Claude, which is my preferred tool for academic-level AI tinkering.

My first task was to set up a project in Claude. Yes, I’m in the camp that would say if you aren’t using Projects, Gems, or GPTs, you’re not really leveraging AI to its fullest. I wanted a project space where I could put all of our working files, and then engage in prompts and conversations to revise and refine the sections of our paper as needed. This was a great use of the ‘projects’ feature.

The context files for my project included:

- Each of our interview answers

- The original deep research report

- Our accepted abstract

- Notes from one of our first calls together where we talked through our vision for the paper

- The original call for abstracts

- The publication’s author guidelines

Bonus: I asked Claude to also conduct a scan of the most popular articles from the publication and identify things they had in common and what tips authors should consider if they want to resonate with the readership. I included that summary in the context files as well. And this is where things started to get fun. With this context and the rich fodder from our collective brains, I had a lot to work with.

Pulling the Thread

First, I asked Claude to analyze our first messy and scattered outline with some draft content against the supporting materials (primarily our interview answers and the literature review) and suggest structural improvements to the outline. It suggested things like splitting one of our sections to improve clarity and expanding another section to allow for more depth. It also identified where we were missing critical elements based on our vision for the piece, and even pushed us to do better on our equity framing.

Reshuffling the Pieces

Then, I asked Claude to comb through our interview answers and begin attaching our ideas to the appropriate places in the outline of our paper. It did a great job at this. It produced a very thorough artifact that had taken all of the thoughts we’d shared in our interview responses and placed them in the relevant section of the paper, while also noting who had said what so that we could go back for questions and follow-up and so we could check its work.

This took some pushing and prodding. Claude was frequently telling me it had reviewed everything when I knew it hadn’t. Or it told me it was referencing a document I’d appended when it wasn’t. I caught these errors during the process and was able to prompt it to try again. Importantly, this was Claude of June 2025 (Opus, 3?). I suspect that the Claude of December 2025, whether it be Opus 4 or even Sonnet, would do much better and this issue would be less of a concern. But this is something you always have to be careful about, and it’s an awareness you build the more you experiment and play with AI.

Pre-Gaming Peer Review (Or: How to Be Your Own Reviewer #3)

That pinged my brain to also look for gaps, so I asked it to take a look at what we had considered and flag where we still had gaps and needed to do more work to support our outline.

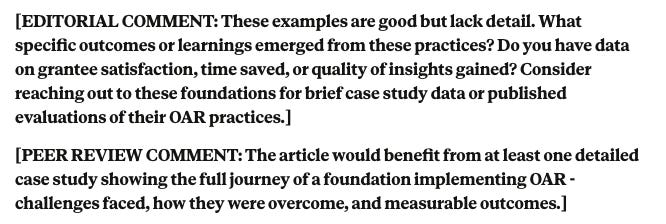

After some additional iteration with my collaborators, we had a solid rough working draft. My next task was to try to be proactive and anticipate reviewer feedback before sending it out for review (h/t to my former colleague, Jeff Kosovich, for the idea to do this!). Because while everyone’s least favorite reviewer always seems to be reviewer number 3, I thought Claude and I could do better.

I prompted:

Now, I’d like you to take a step back. Act as an editor and a peer reviewer. Based on what we have, please indicate in the artifact where we need to strengthen our argument, find more resources, add more detail, and what peer reviewers might suggest.

It produced an artifact that had our messy draft but in each section inserted editor and peer reviewer comments and feedback. Here’s an example of what that looked like:

Asking it to adopt the peer reviewer persona caught that our framework needed stronger theoretical grounding. It also caught that we were too abstract: to be practitioner-focused (and thus a more effective article for the journal’s readership), we needed to be more concrete and actionable in our recommendations. Ultimately, this feedback led to us refining our core framework in really critical ways, which was a huge improvement. The version we submitted came out of reviewing this ‘peer’ review feedback and incorporating some (but not all, as one does) of its suggestions. Our end product was simply better. We strengthened our paper by doing a preliminary critique, which is something I’ve seen many in the higher education community having students do to improve their writing in thought-partnership with AI.

All the Little Bits of Publishing

We were also able to use AI to help with all the lingering tasks of publishing that are often required to actually cross the finish line. Our abstract was too long and needed to be trimmed. A perfect task for AI. I needed to generate 4-8 keywords to include; perfect for AI. When I was reading through the draft and felt like I had a weaker argument or could state something better, I could ask it for a few options showing how I might revise. When I felt like I was losing a thread or crowding out an argument, I could ask it for suggestions of where else I might make this point or how to keep things connected. When I wanted to think about a paragraph or section from a different perspective, it could offer a version of that. It also kept tabs on the author guidelines, helping us produce a draft that was in line with them from the start rather than having to go back and revise to fit.

Finally, when the reviews came in, I was able to give it the reviewer comments and have it identify specific places in the draft where we could address each one. When the revision was finished, I asked it to compare the versions and help me compile points for the letter accompanying the revised submission, which identified the changes we’d made. Again, it helped with the process, but we still did the work.

In all cases, it wasn’t just producing content that I’d copy and paste into the document. It was acting as a mirror…reflector…prism? I was volleying ideas to it and it would volley things back. I could take what I wanted, leave the rest, and walk away with suggestions that helped the (often painful) process of academic publishing remain nimble, interesting, and generative. We collectively still touched every word of the piece, but we had another tool in the mix.

Reflecting Back on the Process

Of course, I also did one of my favorite things to do at the end of a process or experiment with AI: I asked Claude to look back on our threads and give a summary and assessment of what we did. It outlined everything I’ve shared above, but it also completed its analysis with a few helpful insights.

In terms of unique aspects of this approach, it argued that I used AI in ways that created (in its words):

- Transparent Attribution: Carefully tracked which ideas came from which sources

- Respect for Co-authors: Preserved their unique voices while planning integration

- Critical Engagement: Didn’t just accept AI outputs but interrogated them

- Practical Focus: Consistently pushed for actionable, specific guidance

- Recursive Improvement: Each phase built on previous work

- Meta-Awareness: Asked AI to reflect on the process itself

And, given its tendency to please, it even incorporated the core framework of our paper into its review of the process. SO META.

Aw, thanks Claude.

Was this efficient? Heck yes. Way more than any other paper I’ve worked on! But it was also different. It felt like we were really able to draw on one another’s strengths and unique perspectives and that the result was better for it.

AI served our collaboration by helping us see connections, build on rather than just compile ideas, and maintain coherence while preserving individual voices.

Ultimately, this was a successful experiment.

But, it also raises questions:

How do we ensure AI amplifies rather than flattens diverse voices? What techniques did I use here that could inform collaboration, collective impact reporting, and even OAR practices? What might I try next?

What happens to the messy, generative friction of humans in a room hashing out ideas that sometimes produces breakthrough insights? What did my co-authors and I lose in this process? And, was the trade-off worth it?

Are we creating new forms of intellectual dependency? Or, are we just leveraging this technology to jump through the rather arduous hoops of academic publishing (this journal was great to work with, but that’s definitely not usually the case) — hoops that often get in the way of our actual good thinking and sharing it with the world?

I’m not sure. But if you try this, I’d love to hear what you think. I feel like I used AI as a thought partner and didn’t outsource my thinking to it, but leveraged it to help me get through a process where I wanted to ensure I’d done the best job I could.

Small Experiments You Can Try

If you’re curious about AI-amplified collaboration (and also a bit wary, like I was), here are some micro-moves:

This week: Take notes (or ideally a transcript) from your last team meeting and ask AI to identify themes, tensions, and connection points across people. Share it as a conversation starter, explicitly noting it’s AI-synthesized. See what happens.

For your next collaborative project: Before dividing up work, create a shared knowledge base using AI to synthesize background materials. Then develop questions that help each team member build from that foundation. Works great for hosting webinar panels, too!

When feedback comes in: Use AI to map feedback to specific sections and identify patterns. Keep human judgment central but let AI handle the organizational heavy lifting.

