Finding my 'why' in the midst of AI

...and why I can't sit by and watch mission-driven folks sit this one out.

I’ve been writing a lot about AI on both Substack and LinkedIn, but one thing I haven’t shared yet is my “why” — what it is that leads me to feel so strongly about AI, why I want to help mission-driven folks figure AI out, and what experiences give me a unique lens on these challenges.

Sharing one’s why is a pretty vulnerable exercise (at least for me). Writing this has taken me months and a fair bit of self-inflicted intellectual agony. I actually wrote the first draft of this by hand. Yep. Old school. It felt like the kind of thinking I needed to do, and it also felt like a necessary antidote to all of this AI “brain rot” stuff.

Ultimately, this was an affirming exercise (as my brand coach, Annie Franceschi, said it would be). As a solopreneur, it can be really challenging not to feel like I need to be everything to everyone in order to be successful. Finding your ‘niche’ is one thing, but believing in it and believing that your ‘why’ IS what makes you special and gives your perspective value is really hard.

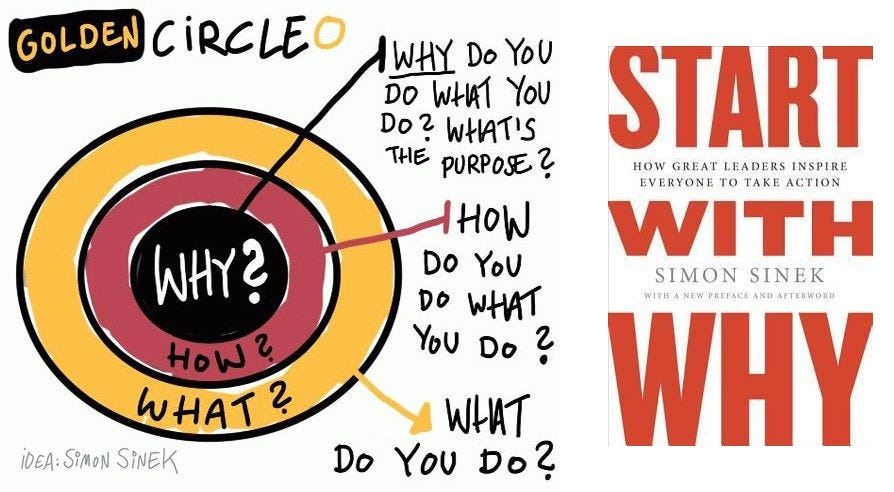

But if I go back to my ‘foundations of epistemology’ course in graduate school (still perhaps my all-time favorite class), I know that sharing one’s ‘why’ is important. It helps people understand the values, lenses, biases, and energy you bring to the topic you're interested in, the discussions you’re having, or the work you’re collaborating on. In Simon Sinek's framing, the ‘why’ sits at the core, shaping both how I do what I do and what it is that I do. Sharing my ‘why’ of AI with you feels important because it informs everything I write here and how I work with clients. I’d love to hear your why, whether it’s your ‘AI why’ or another one. Thanks for sharing in mine.

P.S. Each and every delightful little em dash was thoughtfully placed and typed by yours truly.

Why I Can't Watch Mission-Driven Organizations Sit This One Out

I keep watching some of the brightest, most conscientious, and values-driven people I know turn away from AI entirely. I see foundations and organizations with incredible potential to shape how we use this technology, and with the opportunity to help nonprofits and communities benefit from it, stalling, banning it outright, or deciding it isn’t relevant to their work, whether internally or externally with stakeholders.

Honestly, it breaks my heart. Not because I'm an AI evangelist (I'm not). On most days, I feel both a sense of doom and gloom and a sense of wonder and optimism about it. And I definitely don’t want my worry or concern to come across as shaming anyone. There are some very good reasons to sit this out and, as with all individual choices, I respect that everyone’s calculus is different. I also think there’s an incredibly valuable role to play for those who are sitting it out and critiquing it (as I’ve written about here). However, when it comes to mission-driven organizations and the people who lead them, I expect something different. From my point of view, it's precisely BECAUSE of who they are, the missions they lead, the values they hold, and their commitment to society (and our planet) that they need to be in this conversation. And this conversation (the AI one) isn’t one you can really be part of if you aren’t using and understanding the technology itself.

What I See

The nonprofit sector is in a moment of rupture. There are political, systemic, and structural attacks on both its mission-driven side and its operational side. Social sector programs and organizations, designed to fill gaps in our society, are now subject to threats and surveillance over their content and programming. This threat could affect their tax status and have a chilling effect on donations and funding. The parallel attack on philanthropy, along with the gutting of federal agencies that provide significant grant-based support, means that nonprofits today face the usual uphill battle of "doing more with less" while being existentially threatened and operationally and financially undermined.

Navigating AI can compound that strain. However, in rupture, there is also possibility. This can be a moment for identifying values, doubling down on them, and then experimenting thoughtfully and intentionally while figuring out how to navigate both the AI disruption and the broader rupture in our social fabric.

What really motivates me in this space is this: Tech giants aren't motivated by mission. We need mission-driven folks and the organizations they lead getting in there and doing what we do best: innovating, advocating, collaborating, and building. We can't do that from the outside. For my GoT fans, it feels a bit like ‘winter is coming’: the tech bros are playing an Iron Throne game, and we can’t influence that from beyond the Wall. And while there’s a lot to be afraid of with AI, if we don’t get in there and lead with values over power, I’m not sure who will.

Why I See It Differently

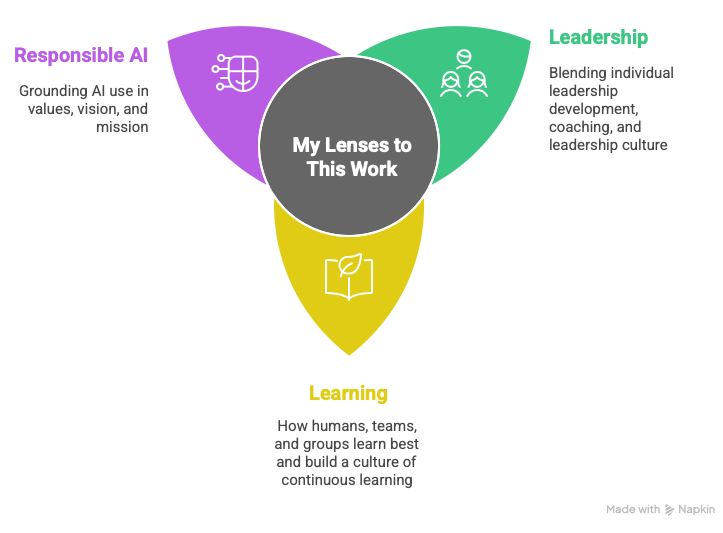

I decided to spend time writing and thinking about my "why" and sharing it with you because I realize my why is inseparable from HOW I see this moment and the ways in which I want to work with people and organizations. I see my work as sitting at the intersection of leadership, learning, and AI. But that only makes sense because of how I've come to understand each piece.

My Leadership Lens: It's About Identity and Social Processes, Not Authority

I think of leadership primarily as a verb. After so many years at the Center for Creative Leadership, I’ve come to believe deeply in CCL's definition of leadership as a social process of building direction, alignment, and commitment. Leadership as a process is inherently different from leadership as a “thing” or a position. It isn't something someone has or doesn't have. It is something they do and continue to do, over time and in relationship with people.

And this is where my psychology background comes in. My academic work focused on human development. Specifically, I studied adolescent identity development and how different contexts shape our individual and interpersonal experiences. This gives me an understanding of how humans see themselves as part of the social world, what that means to them, and the power these identities have over their behavioral choices.

Many jobs, occupations, and careers are associated with specific social identities. We see ourselves as a "doctor," or "nonprofit professional," or "fundraiser," and that means something to us. The work that we do, the things we produce, and the value we add become part of our sense of self (whether it "should" be that way or not is a whole other discussion). Because AI makes that work look "magic," simple, or easy, it makes us feel replaceable. That fear isn't just pragmatic; it’s existential. Leaders must navigate that fear for themselves while implementing changes in their organizations, navigating the aforementioned ruptures, and helping their teams and colleagues work through those feelings as well. No wonder it's tempting just to say “no”! Saying yes invites so many additional layers of complexity and humanity. And it feels high stakes. If you follow the popular press, AI is going to transform the way we live. Sounds like a moment not to mess up, right?

But my coaching training gave me yet another lens on human development and leadership. As a Co-Active trained coach, I learned to approach everyone as "NCRW" [naturally creative, resourceful, and whole]. Those of you with an ‘appreciative inquiry’ background will recognize that idea too. I find it especially critical with AI, where a lot of fear (much of it identity-based) is at play. We need to approach people and organizations as naturally creative, resourceful, and whole: as already having the skills, tools, and mindsets within them, in their values, and in their beings. So much of what I see in the AI media landscape, and even in training, is deficit- and fear-based. I bristle every time I hear “AI won’t take your job, but someone who uses AI will.” Yes, fear is a powerful motivator, but I truly believe what people like Ayana Elizabeth Johnson say: to truly build a better future, we have to motivate people not with fear, but with what might be possible if we get this right.

My Learning Lens: It's Not About ‘Typical’ Corporate Training

Likewise, my approach to "learning" is also multi-faceted. As a former academic, I studied feminist pedagogy, multicultural/multi-lingual classrooms, hybrid and ‘flipped’ higher education models, and experiential learning. That work helped me understand the constructive space of learning, and how we learn in relationship with others, through dialogue, experience, and hands-on experiments with tools in ways that build in reflection, scaffolding, and extended learning.

AI training that is one-way, scripted, and predetermined is unlikely to meet these requirements. What is especially challenging is that we have to train people on something that is existentially threatening and that runs on computers, so it "feels" like a calculator or a conventional computer program, yet doesn't actually work that way at all. Not only that, but to really leverage the tool, people need to be relational and build their own muscles of discernment and specificity, while at the same time allowing for imperfection and experimentation. Talk about the "messy middle"!

Additionally, the tools are developing rapidly, making it challenging to keep up. At the same time, there are some "evergreen" concepts we can "upskill" people on so they can apply them to new tech as they encounter it. In training for AI, we also have to help people get over their initial hurdle. Often, that just means having a good first experience that serves as a sort of "unlock." Training should enable people to use the tools and experiment quickly, while also understanding the risks. We can't do a ton of "talking at"; it has to be more "guiding through."

But I also see learning as part of a bigger equation. While adult learning and development is central to training around AI, there are organizational and cultural practices of learning that really need to be central in every AI implementation, strategy, and training. My lens on this macro-level approach to learning is informed by my training in Emergent Learning. This is a set of processes for how we help groups of people learn across time as efficiently as we often learn on our own.

Emergent Learning (EL) helps people across a system think, learn and adapt together in order to achieve important social change goals.

This means bringing an active learning and experimental mindset, along with the tools to match, to AI experiences. Rather than dropping in and out of a system or organization, it's about building the capacity of the people in the system to continually learn. How can we equip leaders, teams, and organizations to critically examine their processes of learning and innovation, and determine how to build and support them as they navigate the adoption of AI? Moreover, how might AI actually enable better, more efficient, and effective organizational learning practices? And, what might the powerful lens of Emergent Learning — which values diversity of thought, expertise across power and position, and an experimental mindset driven by questions and hypotheses — have to offer the development and implementation of AI?

Often, I see organizations treat learning as a 'nice to have.' With AI, this is a mistake: we need a learning orientation to cope with AI, and AI can also help with learning in many ways.

The AI Lens: Pragmatism Over Polarization

Finally: AI. I am neither an optimist nor a pessimist. I'm a pragmatist. Quite literally — when I took the Change Style Indicator years ago, I was smack in the middle of Conserver and Originator, an exact Pragmatist score. When it comes to change management, pragmatists live right in the middle of the change continuum. I don’t default to chasing shiny new tools or vehemently cling to the way things have always been. Instead, I thrive in the in-between: asking what works, what matters, and what’s possible given the reality we’re in. My strength is integration. I can speak the language of visionaries and system stewards alike, helping teams navigate uncertainty, balance risk and innovation, and move forward with grounded momentum. For me, it’s never change for change’s sake — it’s change in service of learning, leadership, and impact. This has been true throughout my career, and I see it resonating so much with how I approach AI.

When it comes to my specific thoughts on AI at this moment, I believe that approaching AI responsibly isn’t about labeling tools wholesale as “good” or “bad.” Instead, it’s about making intentional, values-aligned choices in how (and if) we use them. Ethics in AI isn't a “checkbox” or a one-and-done policy; it’s an ongoing commitment to curiosity, discernment, and context. My work with AI in the social sector is to help mission-driven leaders navigate this complexity, not by handing them a rulebook (sorry, those of you who want that!), but by creating space to ask the right questions, explore practical boundaries, and experiment safely. My goal is to move past hype and fear into a more grounded and pragmatic conversation, one where technology serves people, values, and missions, not the other way around.

The Opportunity I’m Worried We’re Missing

I also think there is an opportunity at this moment for a different kind of leadership to guide AI (and, relatedly, systems change). I strongly believe in the networked, complexity-science framing of leadership, organizations, and change. And because AI poses very real identity-based threats, it requires a different kind of leadership: one that understands people, relationships, networks, and power.

AI strategy and change management need to live in, or at the very least involve, your culture department. They can't live in IT or HR alone. This isn't a straightforward tech upskill, and the threats to roles and job security complicate the role HR can and should play. Then there's the broad access to this technology that I’ve written about before, and its democratizing effect: everyone has this tool in their pocket. There's a very high likelihood that the intern knows more about it, and how to leverage it, than the CEO.

This is also a moment to examine the learning culture within your organization — the way you actually learn, refine, codify, revise, and share learnings across your organization — and use this AI moment to take a closer look and see what's happening and what could be improved. As I've said in other posts, there is a completely different network at your org that will influence AI adoption. Whether or not you decide to find it, understand it, and leverage it (versus dismantle it, which is futile) is up to you.

This is why I feel so strongly and see so clearly that there is a huge opportunity for organizations and their leaders to capitalize on the AI-driven moment to assess their leadership capabilities, learning practices, and organizational culture all at once. And, in the process of understanding those things, utilize AI adoption as a means to teach new skills, establish new practices, and implement cultural changes where needed, rather than merely seeking to operationalize existing practices.

I Want To Build Differently

Lately, I've been saying that "I'm not a builder, I'm a people mover" — and something about that hasn't felt right at all. It's true that I'm not a tech builder. I can't really code, and a lot of the back-end tech stuff is beyond my reach (and, frankly, desire). But I do build. I help people build their leadership, confidence, culture, practices, policies, processes, and vision for the future.

With CCL, I built a train-the-trainer intro course to get people to dive in with an organization-supported generative AI tool. It was fun, engaging, and immediately applicable, enabling the AI committee to go out into the organization and conduct the training themselves. With independent consultant clients, I'm meeting them where they are, tending to their values and concerns, and helping to guide them through the hype. With organizational teams, I’m focused on alignment. Not ‘model’ alignment, but ‘human’ alignment: How do we understand the different values, fears, and concerns? What can we all agree to, and how can we support one another? What is the role we want this tech to play, and how will we engage with it in ways that serve our work?

So, maybe, when talking about my why/what/how, I should stop saying that I’m not a builder; instead, I should say that I'm an “AI power user, culture builder, people mover”.

To me, that's what a transformative moment is. Not just surviving the disruption, but using it as a catalyst for the change you've probably needed anyway. And a big part of me hopes that in figuring out what it might look like to thrive amidst this disruption, we actually create the systems and change we’ve worked so hard for, rather than succumbing to yet another exploitative technology. Winter IS coming, but it doesn’t mean we have to freeze.

My ask of you: Stop and think about where you are. What's your ‘AI Why’? What else do you need to explore? Where do you feel unclear about how to live into it? It's possible that this is a "play with fire and you're gonna get burned" scenario. But it's also possible that understanding fire and its potential (to warm, illuminate, and even destroy) and figuring out how to leverage it may become a source of energy, if we can use it and carry it responsibly.

Sidebar/Tangent: Playing with AI to generate visualizations and metaphors

My ChatGPT thinks this is a good representation of me and my approach to AI. What do you think?

Interestingly, I had an exchange with Claude (accidentally in Opus 4, and I quickly hit the usage limits) about how it would frame my approach to AI in terms of Game of Thrones. At first, it identified me as a mix of Tyrion, Sam Tarly, and Davos. Mildly flattered, I pointed out that it had chosen all male characters. It then accepted that I was actually “more like the women who actually got things done” (🤣) and identified me as a mix of Olenna Tyrell, Brienne of Tarth (yes!), Margaery Tyrell, and Arya Stark (naturally: “trained in many disciplines, belongs to no single house anymore, uses all her skills to protect what matters”). BUT, when I pointed out that things didn’t end well for the Tyrells, it landed on this… what do you think? (P.S. This is one of my favorite ways to use AI: metaphors galore!)

It rightly called out that perhaps GoT is a bit dark and not in line with my brand identity (touché, Claude), but when I reminded it we live in dystopian times, it relented.

Bonus Content: Examples from my “How I Used AI This Week” Series


💡Did you know?🌱 Helping mission-driven leaders, teams, and organizations thoughtfully and responsibly adopt AI is what I do! If you want to learn more, please reach out!

To stay up-to-date on my writings across platforms, please join my mailing list.

Great read! I started learning about AI as an exercise in self-defense after I attended a meeting in which several execs talked gleefully about replacing writers (like me!) with ChatGPT. Now that I’ve taken an ML class and even built several tools with it, I’d characterize myself as a pragmatist…it’s legitimately useful for some things, ineffective at others, and dangerous in some entirely novel ways.

Like you, I’d rather see nonprofits and other pro-social folks help to shape the conversation around AI now rather than wait for something truly (or even more?) awful to happen.

Hi Valerie, thoughtful post. I share some of the values you mention in the post; I am also interested in leadership, learning, and the evolving understanding and use of AI. However, I wouldn't place AI as a third interest equal to leadership and learning. For me, the third element is life, of which AI will be only a part. Like how we live life, AI can lift us up or take us down; it depends. As you note, it has good potential to help, though for me it needs to be viewed in the broader context of human life, and then probed for how it can help. Hope these thoughts are useful.