The E⁴ Framework: A Guide to Intentional AI Use in Mission-Driven Organizations

How to achieve clarity for AI integration in your work as an individual, team, or organization

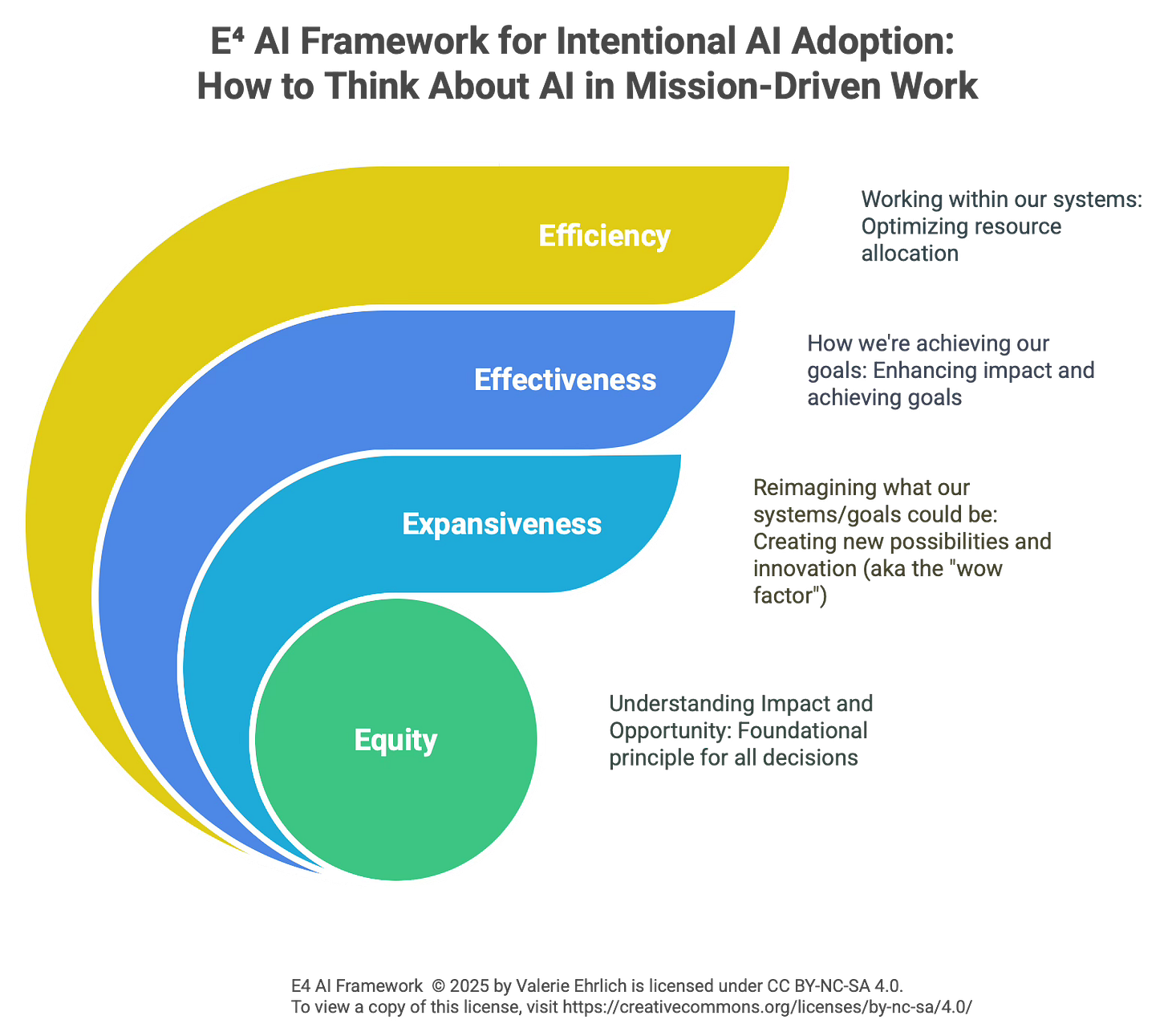

TL;DR: How you frame AI in your work or for your organization shapes what becomes possible with it. The E⁴ Framework for Intentional AI Adoption helps mission-driven leaders and organizations move beyond efficiency-only thinking to explore effectiveness (doing the right things better) and expansiveness (reimagining what's possible), all while centering equity in every decision. It's about creating clarity in uncertainty, not chasing certainty.

How Are You Thinking About AI?

It seems like a simple question, but I find that so many people are thinking about AI in very limited ways. We’re often just considering how it could be a time-saver or reduce our workload. Or, worse, how it might enable us to cram even more ‘productivity’ into our hours. Most of us are a long way off from considering the true “augmentation” or “amplification” that writers on AI talk about. And that’s just those of us who are excited or intrigued by AI. There’s an entire other swath of our colleagues who aren’t even considering AI or don’t want to consider it.

The way we think about AI matters because our perceptions shape our motivation to engage with it. At an individual level, it may not have a huge impact. But at an organizational level, especially if we lead teams, departments, or entire organizations, how we’re thinking about AI is going to have ripple effects across our organization and frame the perceptions of those we work with and how they will think about the role AI can or should play.

Framing matters for your staff because it influences perceptions, motivation, and imagination regarding AI and its potential to help mission-driven organizations create the social change they are working so hard to achieve. But many leaders I see skip the framing question and go straight to trying to identify the solution, jumping to develop a policy or identify the ‘one AI tool to rule them all’ rather than pausing to get intentional and clear about the what and why.

The Danger of AI-First Without AI-Why

I’ve written previously about the challenge of “AI-first” memos and mandates. While aspirational, those types of memos often lack guidance and clarity about what being “AI-first” will actually look like in practice. They put business outcomes over the human-centered side of leadership. But more importantly, in terms of change, they identify the goal without the multiple pathways required to get us there.

Skipping intentional framing of how we think about AI and how we want to use it also means we might limit our potential vision for AI in our work. Emphasizing “efficiency” as the primary outcome for AI leaves a lot of potentially transformative use cases on the table at best, and at worst, it makes people feel like the goal is job reduction.

Finally, skipping framing shortchanges alignment. A key ingredient to good leadership is understanding and being aligned on where it is we’re trying to go (see CCL’s definition of leadership as a social process aimed at achieving direction, alignment, and commitment, aka D-A-C). Right now, when different stakeholders hear “AI”, they are probably imagining very different things, many of which might be impossible, unreasonable, or not really all that useful.

There is a ton of uncertainty in the world right now, and AI is no exception. For mission-driven organizations, the goal shouldn’t be to reach certainty about AI; it should be to collaboratively create clarity. How can we start working toward clarity when we’re all coming at this topic with a wide range of perspectives and experiences, and when we’ve got to get lots of different parts of our organizations in alignment so that we can successfully deploy tools to our staff?

I think one way is to get intentional about the actual problems we’re trying to solve, why they’re important, what solving them will mean for our impact, and how we will know we’re making progress toward those goals. I’m sharing with you one tool, the E⁴ Framework for Intentional AI Adoption, that can help build that clarity. I will refine this over time, so your input and experiences using this will be incredibly valuable.

Introducing E⁴: A Framework for Intentional AI Adoption

This framework has evolved over the last several months. It began when I encountered examples of “leading” versus “following” AI, which got me thinking about how we categorize AI use cases and how I was seeing organizations talk more about efficiency than effectiveness. I then had a conversation in which I was challenged to move beyond those two and not forget about the expansive, transformative, “what might be possible?” cases that initially ignited my excitement about AI. Finally, I’m working with a co-author on incorporating this framework into a paper we are writing on Oral and Alternate Reporting (OAR) in grantmaking. It was through that partnership that I realized the need to add a cross-cutting layer of equity to the entire framework.

I’m giving you that backstory because this thinking has been developed primarily in collaboration with humans, along with some helpful back-and-forth exchanges with AI (specifically me asking Claude to ask me questions that I then record voice memos about). There are a lot of folks claiming to be experts in AI, but the fact is we’re all figuring this out as we go, and the best way to figure it out is in dialogue with really incredible thinkers who push our understanding.

The goal of the framework, described below, is to help individuals, leaders, and organizations answer: "What are the pain points in our work that we're trying to solve for, what might we do better if we brought in AI, and how should we do so in a way that aligns with our values?"

The Three E's: Working In, Through, and Beyond Your Systems

Efficiency: Making Space for What Matters

Efficiency involves getting tasks done with the least amount of time, energy, or resources. It focuses on speed, cost reduction, and resource optimization. In practice, efficiency looks like using AI to: reduce administrative burden, free up time for more meaningful work, streamline repetitive processes, make better use of limited resources, and remove friction from necessary tasks.

Ideally, efficiency would not be about using AI to: enable a reduction in force/layoffs, just “do more with less”, cram more into already full schedules, cost-cut at the expense of achieving the mission, or improve speed for speed’s sake. It also matters who is talking about efficiency. If the aspirations for efficiency are only coming from the C-suite, chances are people are going to be worried about layoffs. But if the desires for efficiency are being driven across roles and levels, it may be both less threatening and more likely to be practical and relevant to the day-to-day work.

Examples of efficiency include automating donor emails, creating meeting summaries and automated notes, leveraging transcription and translation tools, proofreading for inclusive language, and conducting basic data analysis such as theming feedback or segmenting donor data.

Efficiency is often where we start thinking about using AI, and it is full of valuable use cases. But it isn’t the only way we should be thinking about AI.

Effectiveness: Amplifying Your Purpose, Not Just Your Output

Effectiveness is about how we’re using AI to achieve desired outcomes such as: helping nonprofit leaders make better decisions, predict trends, and align AI with mission-driven goals. I think ‘effectiveness’ use cases are the ones a lot of nonprofit leaders are craving because they tap into our ability to truly be in alignment with our goals and our own expertise when using AI. Effectiveness is what helps us more naturally say, ‘what is it I’m supposed to be doing in my job, and how might I be able to do it even better?’

Effectiveness looks like: enhancing impact and outcomes by collaborating on program design, making better strategic decisions by being able to leverage historical sources and documents, improving the quality of your work by offering a practice space or sounding board, aligning efforts with the mission so you see how AI is enabling your work, and ultimately, deepening resonance and connection by allowing you to focus on the human-centered and relational aspects of your work as a leader.

What differentiates effectiveness from efficiency is that it is about focusing on improving what you’re already supposed to be doing (or what you’d like to be doing). It is explicitly about bringing in expertise, judgment, quality, and strategic alignment in terms of your use of AI. Therefore, it has a natural connection to purpose. In this way, it might actually be easier to implement and envision, because it builds from our core roles and responsibilities. Many of the use-case ideas lean toward that more ‘amplified’ and ‘augmented’ method of leveraging AI, and once people start experimenting, their experiments often spark further ideas related to effectiveness.

Expansiveness: Co-Creating Futures We Can't Yet Imagine

Expansiveness is a way of thinking about AI that expands possibilities, inspires new ideas, and builds motivation by revealing opportunities, transforming engagement, and co-creating the future of mission-driven work. Expansive thinking helps us engage one another in the bigger vision of “what might be possible” for AI and our sector (to borrow a brilliant question). As Rachel Kimber noted in a recent talk, this is how we go from VHS to Netflix to streaming. What’s the equivalent for your organization or for the wicked challenge you’re working so hard to solve?

Expansiveness looks like reimagining what's possible in terms of solutions to address the challenges we’re working on, creating entirely new approaches that might not have been possible at scale, solving previously unsolvable challenges using technology, or envisioning transformative change rather than incremental change. It isn’t tech solutionism or innovating for innovation’s sake. It’s about really engaging with possibility and thinking ‘outside of the box’ for solutions.

This kind of thinking is a cognitive stretch for a lot of us. It asks us to be generative and imaginative. Frankly, that can feel incredibly hard these days when there is so much uncertainty, so much suffering, and the fate of our organizations and the ability to meet the needs of our stakeholders are under constant threat. We can both use AI to aid us in thinking expansively and challenge ourselves to think expansively about how we might use AI to address our organizational challenges and societal issues.

Through an Equity Lens: Who Benefits, Who Decides?

One limitation of frameworks is that they often need to be simple, two-dimensional guides that favor utility and application over theoretical complexity and visual aesthetics. How to position equity was a challenge I’m still not sure I’ve solved. It is certainly an “E” worthy of its own unique place in the framework, but it isn’t just an add-on as a fourth category. It is the scaffolding that surrounds the entire framework, the skeleton that supports the body. The core goals and questions of equity can and should cut across the other three categories.

Equity in thinking about and leveraging AI in organizations looks like using it as a lens through which all decisions are made, actively questioning issues of power and access, redistributing benefits and burdens, centering marginalized voices, and examining (and remedying) built-in biases.

When equity is present in our thinking it changes the questions we tend to ask, reveals hidden assumptions and/or gaps in our knowledge, and drives our AI use toward justice, not just fairness.

Key questions to use when adopting an equity lens include:

"Who stands to benefit? Who doesn't?"

"Who's present at the table, who's not?"

"Whose challenges, whose “problems” are we solving? Why? Whose problems matter?"

We often think of equity as requiring huge systemic solutions, but applying equity as a lens can happen regardless of the size, scale, or scope of the initiative. For example, an efficiency use case as simple as automated note-taking, when examined through the lens of equity, might have us asking whether we’re going to give access to that tool to everyone in the organization or only to specific roles (and why or why not?).

The Ripple Effect of How We Frame AI

As I mentioned earlier, one reason to focus on framing is that it influences the perceptions and motivations of your staff, colleagues, and stakeholders around AI’s potential for your mission-driven work. But a more tangible and practical implication is that when we’re only thinking about one type of AI use case, we might be selecting tools, writing policies, or rolling out practice guidelines in very narrow and limited ways. A policy that is written only for efficiency is going to lack guidelines and guardrails for effective or expansive uses, and may even limit their potential. It can be fine to start with this, but the E⁴ Framework shows us where we will want to consider deepening our knowledge and revising our policies so that we continue to adapt to AI’s potential in our work.

Ultimately, this is about being intentional about what it is we’re trying to do with AI and why. If you are framing the different uses of AI in ways that expand people’s thinking, they’re able to think about what they are doing with AI, how it maps onto the organization’s broader goals, how it relates to their job function or tasks, and what it could potentially do in their day-to-day work of supporting the mission and vision of the organization.

But it also matters for measurement. Nearly every week there’s another headline about how AI is falling short of “ROI”. But…ROI for what? For mission-driven organizations that often aren’t in the business of manufacturing widgets, the ROI is always more complicated. Understanding the ROI of how AI can help us achieve mission-driven goals is probably not something we can easily put on a dashboard. But, when we get intentional about framing we can start to ask the right questions that will help us understand the metrics we should use to assess the impact of AI and how to improve our integration of AI into our work. We can identify where quantitative metrics can demonstrate efficiency, or how storytelling or narrative change might provide evidence of effectiveness. It lets us match our metrics to the purpose.

Putting E⁴ into Practice

Here’s what this could look like across different categories of ways mission-driven professionals and organizations might leverage AI. These are just some imperfect examples and I would really love to hear your thoughts and additions.

Data Handling

Efficiency: Automated transcription, meeting notes, translation

Effectiveness: Pattern analysis across meetings, identifying key themes, tracking decision evolution

Expansiveness: AI that surfaces unheard voices, connects insights across organizations, reveals systemic patterns

Equity lens: Whose languages are supported? Whose communication styles are recognized? Whose voices become more visible through analysis? Who controls cross-organizational insights?

Reporting & Communication

Efficiency: Auto-generate summaries, SEO optimization, proofreading

Effectiveness: Personalized content for different audiences, impact storytelling, engagement analytics

Expansiveness: Community co-creates reporting formats, AI learns from stakeholder preferences, multi-directional communication flows

Equity lens: Who defines "professional" communication? Whose stories get amplified? Who participates in reshaping communication norms?

Project & Stakeholder Engagement

Efficiency: Task management, scheduling, automated updates

Effectiveness: Sentiment analysis, participation pattern insights, engagement quality metrics

Expansiveness: Stakeholders train AI on their needs, power-mapping reveals hidden dynamics, new models of shared decision-making

Equity lens: Who is considered a "stakeholder"? Whose engagement preferences shape the tools? How does power shift through these processes?

Fundraising & Donor Insights

Efficiency: Thank you automation, donor database management

Effectiveness: Behavior prediction, giving capacity analysis, personalized cultivation

Expansiveness: Donors and communities co-design funding priorities, AI facilitates participatory budgeting, wealth redistribution models

Equity lens: Who gets cultivated as a "donor"? Whose funding priorities drive strategy? How do we flip traditional power dynamics?

Grant Management & Compliance

Efficiency: Application screening, deadline tracking, report templates

Effectiveness: Impact forecasting, strategic grant matching, risk identification

Expansiveness: Inter-organizational grant collaboration, shared learning systems, collective impact measurement

Equity lens: Who can navigate complex applications? Whose impact definitions matter? How do we share power in collaborative funding?

Your Next Move: Making E⁴ Real

I wouldn’t be an academic descendant of Kurt Lewin if I didn’t note that ‘nothing is so practical as a good theory’ and, in this case, that means committing to making this framework actionable for you to use in your own work. Here are some ideas for how you might move from the conceptual to the applied, leveraging small moves along the way:

Look at your current AI experiments (or resistance) - which E are you focusing on? How is this framing shaping your decisions, choices about tools, or design of policies and guidelines?

Identify one well-known pain point in your organization - how might each E address it differently? What do you gain by thinking about the possible solutions through those different frames?

Ask the equity questions about any and every AI tool you're considering. That goes for use cases and experiments as well.

Share this framework with your team and discuss: What kind of AI have we been imagining? How does it serve us? How might it serve our mission?

Moving Forward with Intention

Ultimately, “success” with this framework looks like holding all of these things in mind as you and your organization navigate AI. It’s about recognizing and being intentional about what you’re saying about AI, how you’re deciding to use it, and what you’re prioritizing (or not). It also looks like revisiting the ideas presented here as new tools and features arise. Frameworks can ground us amidst the hype, offer a way of building alignment, and help us speak with clarity about what it is we’re trying to achieve. I hope that this framework also allows you to leverage your mission, values, goals, and vision as you identify where you do and don’t want AI involved.

I will continue to refine this in my work with clients and I’d love to hear your feedback if you use it. I’ll also be working on a downloadable version and will be sure to share that with subscribers (all subscriptions are free).

💡Did you know?🌱 Helping mission-driven leaders, teams, and organizations thoughtfully and responsibly adopt AI is what I do! If you want to learn more, please reach out!